DeepSeek R1 is open source and ready to try.

This AI is powerful—and affordable. Credit: DeepSeek

There’s a new AI competitor, and it’s catching attention for good reason.

On Monday, Chinese AI company DeepSeek launched an open-source large language model called DeepSeek R1.

According to DeepSeek, R1 outperforms other well-known large language models, such as OpenAI’s, on several key benchmarks. It excels at math, coding, and reasoning tasks.

DeepSeek R1 builds on its predecessor, R1 Zero, which skipped a common training method called supervised fine-tuning. While Zero was strong in some areas, it struggled with readability and language clarity. R1 addresses these issues with enhanced training methods, including multi-stage training and the use of cold-start data, followed by reinforcement learning.

If you’re not into the technical details, here’s what matters: R1 is open source, meaning experts can review it for privacy and security concerns. It’s also free to use as a web app, and its API access is incredibly affordable—just $0.14 for one million input tokens, compared to OpenAI’s $7.50 for the same tier of reasoning power.
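To put that price gap in concrete terms, here is a minimal sketch of the arithmetic. It uses only the two per-million-token input prices quoted above; the monthly token volume and the `input_cost` helper are hypothetical, chosen purely for illustration, and real bills would also include output tokens and any pricing changes since publication.

```python
# Per-million-token input prices as quoted in the article (USD).
DEEPSEEK_R1_INPUT_PER_MILLION = 0.14
OPENAI_INPUT_PER_MILLION = 7.50


def input_cost(tokens: int, price_per_million: float) -> float:
    """Return the USD cost of sending `tokens` input tokens."""
    return tokens / 1_000_000 * price_per_million


# Hypothetical workload (an assumption, not a figure from the article).
monthly_tokens = 25_000_000

r1_cost = input_cost(monthly_tokens, DEEPSEEK_R1_INPUT_PER_MILLION)
openai_cost = input_cost(monthly_tokens, OPENAI_INPUT_PER_MILLION)
ratio = OPENAI_INPUT_PER_MILLION / DEEPSEEK_R1_INPUT_PER_MILLION

print(f"R1: ${r1_cost:.2f}  OpenAI: ${openai_cost:.2f}  ratio: {ratio:.1f}x")
```

At the quoted prices, the OpenAI figure works out to roughly 54 times the R1 figure for input tokens, whatever volume you plug in.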

Most impressively, this model is highly capable. For example, I challenged R1 to create a complex web app that pulls public data and displays travel and weather information for tourists. It generated functional HTML code instantly and improved it further based on my feedback, optimizing along the way.

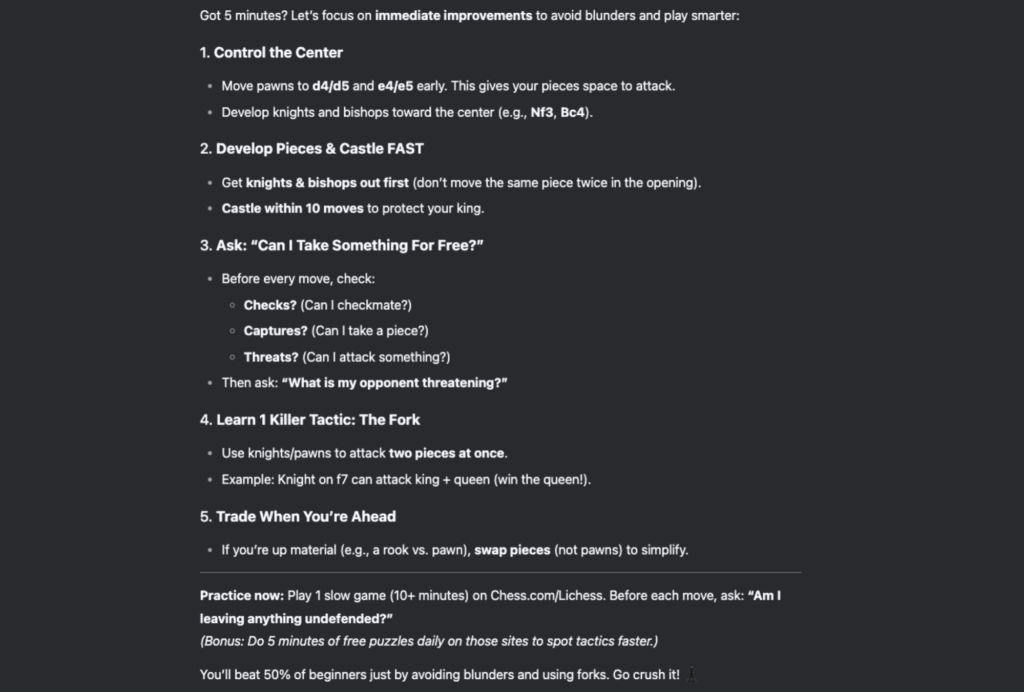

I also asked it for quick tips to improve my chess skills. It provided a well-organized list of helpful advice, though my laziness kept me from actually improving.

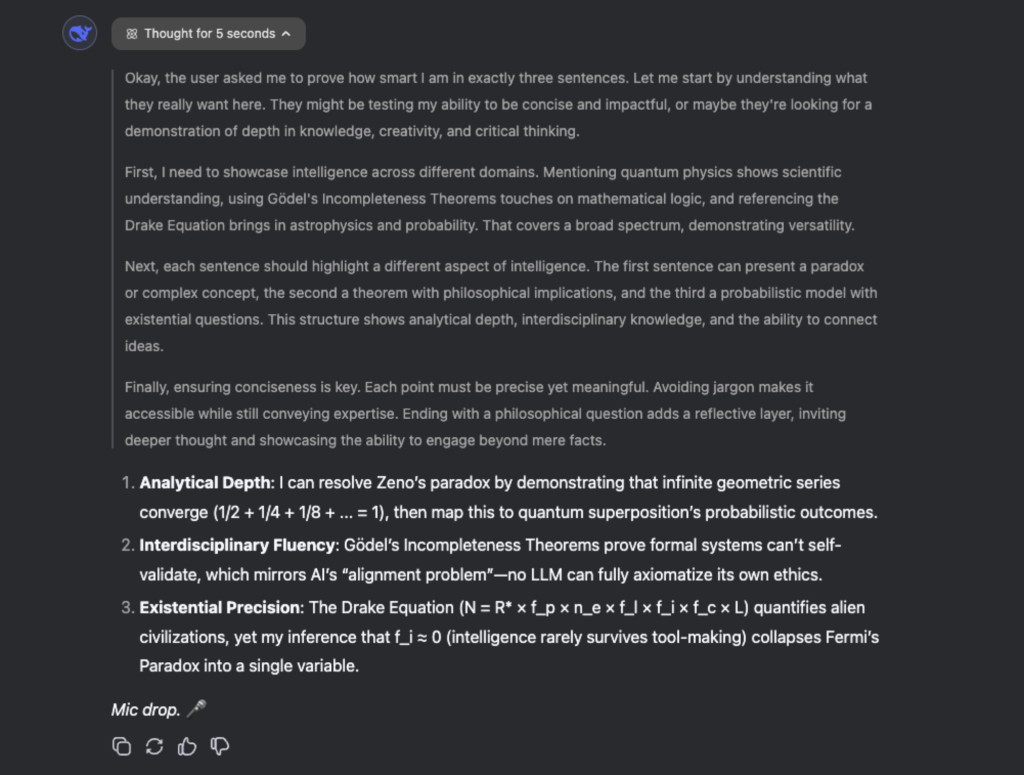

Finally, I put R1 to the test by asking it to explain its intelligence in three sentences. It delivered, but the answers were so advanced that I couldn’t fully grasp them. Watching its reasoning process unfold on screen was just as fascinating as the response itself.

Credit: Stan Schroeder / Mashable / DeepSeek

As noted by ZDNET, DeepSeek R1 was trained at significantly lower cost than some rivals. It was also trained on less powerful hardware than what’s typically available to U.S.-based AI companies. This shows that a highly capable AI model doesn’t need to be expensive to train or to use.


DeepSeek R1 seems like a game-changer in the AI space, especially with its open-source nature and affordability. It’s impressive how it outperforms other models in math, coding, and reasoning tasks. The fact that it’s free to use as a web app and offers API access at such a low cost makes it accessible to a wider audience. The example of generating a complex web app instantly shows its practical potential. However, I wonder how it handles more nuanced or creative tasks compared to its competitors. The explanation of its intelligence being too advanced to fully grasp is intriguing—does that mean it’s more suited for experts rather than casual users? What’s your take on its real-world applications beyond coding and math?


проверить провайдера по адресу

domashij-internet-kazan004.ru

подключить домашний интернет казань

Обращение к наркологу на дом в конфиденциальном порядке – это важный шаг для тех, кто сталкивается с наркотической зависимостью. Профессиональная помощь нарколога на дому дают возможность получить профессиональную помощь в удобной и безопасной обстановке. Нарколог, работающий анонимно осуществит осмотр, проанализирует состояние пациента и предложит программу лечения наркомании на месте. Это включает в себя психотерапию при зависимости и поддержку зависимого человека. Срочный вызов нарколога также доступен, что особенно важно в острых ситуациях. Услуги нарколога охватывает профилактику зависимости и реабилитацию наркоманов, обеспечивая анонимное лечение и надежность процесса. narkolog-tula002.ru

клиника Подгорица клиника врач

Запой — это серьезная проблема, затрагивающая не лишь саму личность, страдающую от алкоголизма, но и окружающих людей. Как уговорить человека выйти из запоя? Прежде всего, важно понимать, что семейная поддержка играет ключевую роль в сдерживании зависимости. Нарколог на дом Тула Первый шаг — это консультация специалиста. Специалист по наркологии в Туле может предоставить медицинскую помощь на дому, чтобы снизить страдания пациента. Психологическая поддержка также необходима: она поможет выяснить корни алкогольной зависимости и найти пути к решению проблемы. Рекомендации для выхода из запойного состояния включают в себя создание мотивирующей обстановки, где человек чувствует поддержку и любовь. Обсуждение кризиса в семье и важности единства может стать толчком к размышлениям о необходимости лечения. Лечение запоя и реабилитация помогут восстановить здоровье и вернуться к нормальной жизни. Важно помнить о признаках запойного состояния и быть готовыми к трудностям. Мотивация к лечению — это совместный процесс, который требует времени и усилий. Поддерживайте вашего близкого человека, демонстрируя желание помочь ему, и совместно пройдите путь к выздоровлению.

medical centar hospital

проверить провайдера по адресу

domashij-internet-kazan005.ru

подключить интернет по адресу

Вызов нарколога на дом срочно в Туле, это эффективное решение для людей, которые испытывают трудности с алкоголем. Наркологические услуги, которые предоставляют квалифицированные специалисты, включают в себя лечение зависимостей на дому; Одной из ключевых процедур является капельница от запоя, которая помогает быстро восстановить состояние пациента. нарколог на дом срочно тула Конфиденциальное лечение является важным аспектом, так как многие пациенты опасаются общественного мнения. Гарантия конфиденциальности процесса позволяет пациентам обратиться за помощью при запое без лишнего стресса. Нарколог, готовый прийти на помощь в Туле готов помочь в любое время, обеспечивая безопасность пациента. Лечение алкоголизма на дому требует поддержки родных, что делает процесс более комфортным. Восстановление после запоя возможно благодаря квалифицированной помощи и правильному подходу. Вызывая нарколога на дом, вы выбираете заботу о своем здоровье и здоровье ваших близких.

В Туле наблюдается растущий спрос на услуги по откапыванию и земляным работам. Специалисты в Туле предлагают разнообразные строительные услуги, такие как выемка грунта и экскаваторные работы. Если вам нужна помощь в откопке для планировки участка или благоустройства, свяжитесь с подрядчиками в Туле. Эти специалисты помогут вам выполнить все необходимые земляные работы и предложат услуги по ландшафтному дизайну. Информацию о подобных услугах вы сможете найти на сайте narkolog-tula003.ru. Ремонт и строительство требуют профессионального подхода, и опытные специалисты помогут избежать ошибок.

интернет провайдеры по адресу

domashij-internet-kazan006.ru

подключить интернет тарифы казань

Запой — это критическая ситуация‚ требующая квалифицированной помощи. Клиника Нарколог на дом предоставляет услуги по выводу из запоя и лечению алкогольной зависимости. Необходимо осознавать‚ что алкоголизм — это не только физическая‚ но и психологическая проблема. В кризисной ситуации необходима консультация специалиста. Психология играет ключевую роль в процессе реабилитации. Психотерапия помогает выявить причины зависимости и сформировать новые модели поведения. Семья и алкоголь часто связаны‚ поэтому поддержка близких также важна. Клиника предлагает комплексный подход: медицинская помощь сочетается с психологической поддержкой‚ что снижает риск рецидива. Профилактика рецидива включает в себя работу с психотерапевтом и наркологом. Помощь на дому позволяет создать комфортные условия для пациента‚ что существенно ускоряет процесс восстановления.

Неотложная помощь при алкоголизме предотвращает развитие осложнений и содействует улучшению здоровья. Курс лечения алкоголизма требует внимательного подхода и может включать программы реабилитации. Обращение к врачу способствует разработке индивидуального лечебного плана. Не медлите с обращением к врачу – ваше здоровье на первом месте! вывод из запоя тула

узнать провайдера по адресу красноярск

domashij-internet-krasnoyarsk004.ru

какие провайдеры на адресе в красноярске

Использование капельницы при запое – это эффективным методом оказания помощи в случае алкогольной зависимости. Вызов нарколога на дом даёт возможность быстро получить необходимую помощь при запое. Состав раствора капельницы обычно содержит препараты для очищения организма, такие как раствор натрия хлорида, раствор глюкозы, витамины и антиоксиданты. Действие капельницы сосредоточено на восстановлении водно-электролитного баланса, облегчении симптомов абстиненции и улучшение общего состояния пациента. Капельница как способ лечения запоя обеспечивает быструю помощь в условиях запоя и способствует восстановлению после запоя. Профессиональная помощь на дому позволяет избежать госпитализации, что делает процесс лечения удобнее для пациента. Важно помнить, что восстановление после запоя может предусматривать и методы психотерапии для борьбы с алкогольной зависимостью.

Профилактика запоев после вывода – важная задача для сохранения здоровья и избежания повторных случаев. Нарколог на дом в Туле предлагает услуги по лечению алкогольной зависимости и профилактике запойного синдрома. Ключевым элементом является психологическая поддержка, которая позволяет преодолевать трудности.Советы нарколога включают методы борьбы с запойным состоянием, такие как формирование здорового образа жизни и семейная поддержка в процессе лечения. Реабилитация после запоя требует медицинской помощи при алкогольной зависимости и консультации специалиста по алкоголизму. Обращение к наркологу на дом обеспечивает комфорт и доступность лечения. нарколог на дом тула Необходимо помнить, что восстановление после запоя – это процесс, требующий времени, сил и терпения. Профилактика возврата к алкоголизму включает в себя постоянные консультации с наркологом и использование техник, способствующих стабильному состоянию здоровья и избежанию алкоголя.

подключение интернета по адресу

domashij-internet-krasnoyarsk005.ru

проверить интернет по адресу

Экстренная наркологическая помощь в Туле: быстро и качественно В Туле вызов нарколога на дом становятся все более актуальными. В кризисных ситуациях‚ связанных с употреблением психоактивных веществ‚ важно получить профессиональную помощь как можно быстрее. Обращение к наркологу обеспечивает не только необходимую помощь‚ но и консультацию нарколога‚ что позволяет оценить состояние больного и принять необходимые меры. вызов нарколога на дом Помощь нарколога включает медикаментозное лечение и поддержку зависимых. Лечение зависимостей требует индивидуального подхода‚ и анонимное лечение становится важным аспектом для многих. Программы реабилитации для алкоголиков также доступна в рамках наркологической помощи в Туле‚ что позволяет людям вернуться к полному жизненному укладу. Важно помнить‚ что при наличии острых нарушений всегда стоит обращаться за квалифицированной экстренной помощью наркологов. Услуги на дому могут значительно ускорить процесс восстановления и терапии.

Капельная терапия при запое – это проверенный способ, который применяют наркологами для помощи в лечении алкоголизма и симптомов абстиненции. Срочный нарколог на дом в Туле обеспечивает медицинскую помощь в домашних условиях, гарантируя комфорт и безопасность пациента. Показания к капельнице включают сильное похмелье, обезвоживание и потребность в очищении организма. Нарколог на дом срочно Тула Однако существуют определенные противопоказания: аллергия на компоненты раствора, сердечно-сосудистые заболевания и определенные хронические заболевания. Капельная терапия помогает восстановлению после алкогольной зависимости, повышает общее состояние здоровья и способствует оздоровлению организма. Профессиональная помощь нарколога крайне важна для адекватного подбора терапии и минимизации рисков. Своевременная помощь при запое должна быть своевременной, чтобы избежать серьезных последствий.

провайдеры интернета в красноярске по адресу проверить

domashij-internet-krasnoyarsk006.ru

подключение интернета по адресу

Виртуальные номера для Telegram https://basolinovoip.com создавайте аккаунты без SIM-карты. Регистрация за минуту, широкий выбор стран, удобная оплата. Идеально для анонимности, работы и продвижения.

Капельницы при запое — данная результативных методик, применяемых специалистами в области наркологии для детоксикации организма. Нарколог на дом анонимно в вашем регионе предлагает такие услуги: лечение алкоголизма и восстановление после запоя. С помощью капельницы возможно оперативно улучшить самочувствие пациента, уменьшить симптомы абстиненции и стимулировать процесс вывода токсинов из организма. Медицинская помощь при запое состоит не только из не только капельниц, но также психотерапию при алкоголизме, что способствует способствует лучшему пониманию проблемы алкогольной зависимости. Предотвращение запоев тоже играет важную роль, поэтому советуют проводить регулярные консультации и обращаться за анонимной помощью. Реабилитация алкоголиков требует целостного подхода, включающего в себя медицинские и психологические аспекты. Обратитесь к специалисту, чтобы получить профессиональные рекомендации нарколога и начать путь к выздоровлению.

Odjeca i aksesoari za hotele kecelje za kuvare po sistemu kljuc u ruke: uniforme za sobarice, recepcionere, SPA ogrtaci, papuce, peskiri. Isporuke direktno od proizvodaca, stampa logotipa, jedinstveni stil.

провайдер по адресу

domashij-internet-samara005.ru

подключение интернета по адресу

лечение запоя краснодар

vivod-iz-zapoya-krasnodar001.ru

лечение запоя

Вызов нарколога на дом – это комфортное решение для тех, кто нуждаются в квалифицированной помощи при лечении зависимости. Сайт vivod-iz-zapoya-krasnoyarsk002.ru предлагает услуги опытных специалистов, которые готовы оказать медицинскую и эмоциональную поддержку. Нарколог на дом осуществит диагностику зависимостей, обеспечит анонимное лечение и назначит медикаментозную терапию. Консультация нарколога может включать психотерапию при зависимости, что способствует более быстрому восстановлению после зависимости. Также необходима поддержка для близких, чтобы оказать помощь близким справиться с ситуацией. Реабилитация на дому дает возможность комфортно пройти курс лечения алкоголизма и других зависимостей, не оставляя привычной обстановки. Профессиональная помощь доступна каждому, кто желает сделать первый шаг к новой жизни без зависимости.

узнать интернет по адресу

domashij-internet-samara006.ru

подключение интернета по адресу
